Understanding what makes a robust algorithm

When fitting a model to a data set, we would like to be certain that we are recovering the ground truth. In most cases, we do not get a good fit at first, and we need to decide what to do next. Some researchers increase the sample size. Others change the optimization algorithm or the mathematical model and its assumptions. Still others conclude that there is not enough signal and resample with higher precision or from a different population. Which of these choices is the right one?

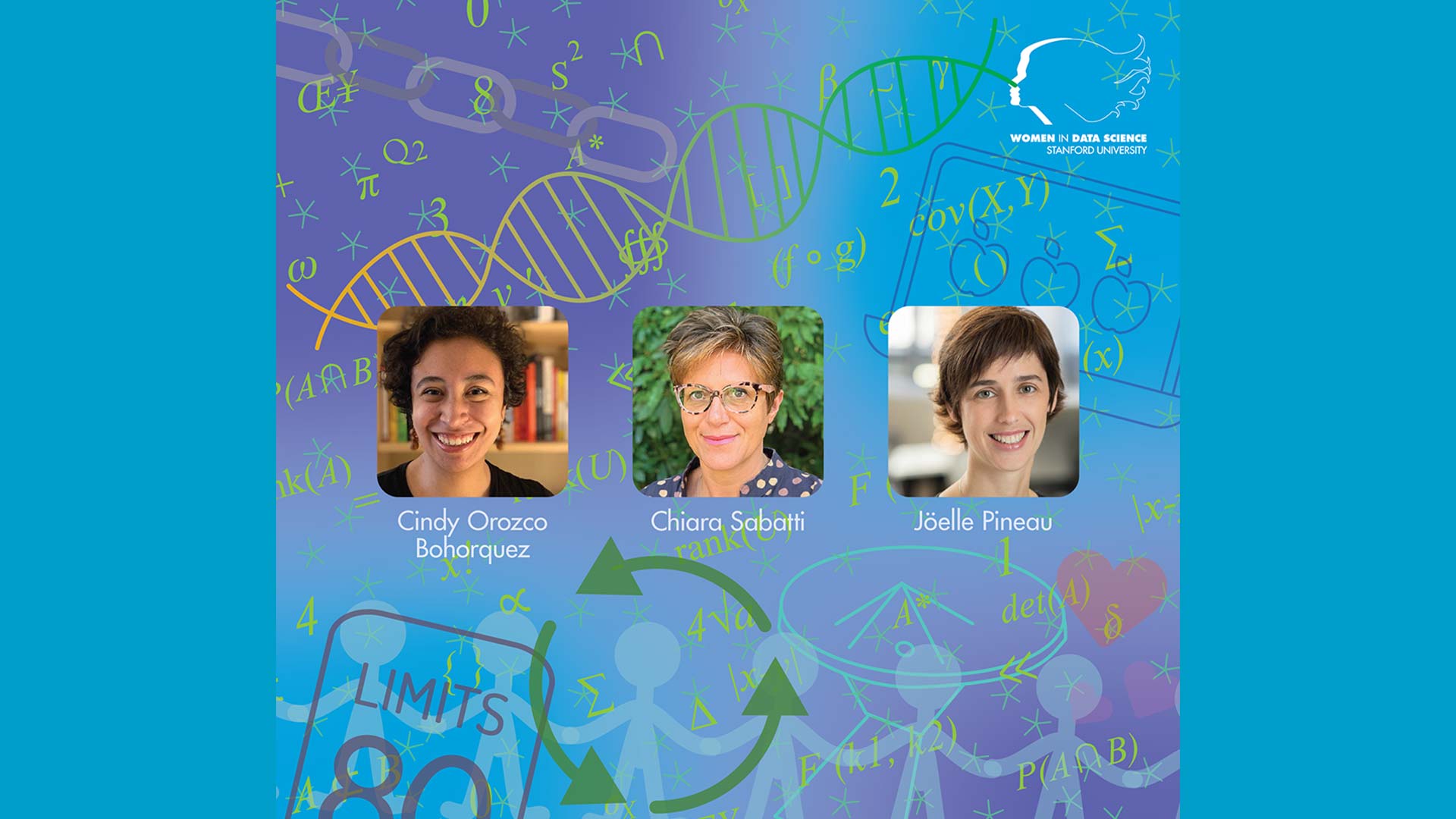

Cindy Orozco Bohorquez, a data scientist at Cerebras Systems with a PhD from Stanford’s Institute for Computational and Mathematical Engineering, delivered a talk addressing the question “What does it mean to have a robust algorithm?” She examines this question for point-set registration, a classical problem in computer graphics and satellite communication. She combines results from statistics, optimization, and differential geometry to compare the solutions that different registration algorithms produce. As a result, she provides a direct mapping between each algorithm’s rate of success and the peculiarities of different datasets.

How to build reproducible, reusable and robust machine learning systems

Reproducibility is the ability of a researcher to duplicate the results of a previous study using the same materials as the original investigator. Results can vary significantly given minor perturbations in the task specification, data, or experimental procedure. This is a major concern for anyone interested in using machine learning in real-world applications.

Joelle Pineau, Co-Managing Director at Facebook AI Research and Associate Professor at McGill University, discusses this challenge in her WiDS talk “Building Reproducible, Reusable, and Robust Deep Reinforcement Learning Systems.” She describes challenges that arise in the experimental techniques and reporting procedures of deep learning, with a particular focus on reinforcement learning and its applications to healthcare. She explains several recent results and guidelines designed to make future results more reproducible, reusable, and robust. One of the tools she describes is a machine learning reproducibility checklist, a systematic way to make sure that authors document all the relevant aspects of the work being presented. The checklist helps ensure that papers thoroughly document the components they include, and it helps reviewers evaluate each paper’s completeness.

Improving how we communicate scientific findings

When large, comprehensive datasets are readily available in digital form, scientists often analyze the data before formulating precise hypotheses, with the goal of exploring and identifying patterns. What is the difference between one of these initial findings and a “scientific discovery”? How do we communicate the level of uncertainty associated with each finding? How do we quantify its level of corroboration and replication? What can we say about its generalizability and robustness?

Chiara Sabatti, Professor of Biomedical Data Science and Statistics at Stanford University, addresses these questions in her WiDS talk “Replication, Robustness, and Interpretability: Improving How We Communicate Scientific Findings.” She approaches these challenges from the vantage point of genome-wide association studies. She reviews some classical approaches to quantifying the strength of evidence, identifies some of their limitations, and explores novel proposals. She underscores the connections between clear, precise reporting of scientific evidence and social good.

Summary

The robustness, reproducibility, and interpretability of algorithms are critical to ensuring that everyone can produce consistently high-quality science and reliable findings. We need to work together to adopt best practices so that the results of a previous study can be duplicated. As Joelle Pineau noted in her talk, science should not be treated as a competitive sport. Researchers need to commit to the idea that science is a collective institution in which everyone works together to advance our shared creation and exploration.